NVIDIA is bringing a range of advances in rendering, simulation, and generative AI to SIGGRAPH 2024, the upcoming computer graphics conference. The company plans to present more than 20 research papers showcasing work on diffusion models for visual AI, physics-based simulation, and realistic rendering.

Diffusion Models Enhance Texture Painting and Text-to-Image Generation

NVIDIA's research is advancing the capabilities of diffusion models, a popular tool for turning text prompts into images. One collaboration, ConsiStory, introduces a technique called "subject-driven shared attention" that cuts the time needed to generate consistent imagery of a main character from 13 minutes to just 30 seconds. Keeping a character consistent across images is essential for storytelling applications such as comic strips and storyboards.
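As a rough illustration of the shared-attention idea, the sketch below lets each image in a batch attend to all of its own tokens plus only the subject tokens of the other images, so the subject's appearance is shared across the batch while backgrounds stay independent. This is a simplified, single-head toy in plain Python, not ConsiStory's implementation: the function name `shared_attention` is hypothetical, and it assumes the per-image subject masks are already known.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shared_attention(queries, keys, values, subject_masks):
    """Toy single-head attention shared across a batch of images (hypothetical).

    queries, keys, values: one list of token vectors per image.
    subject_masks: one list of booleans per image marking subject tokens
    (assumed to be given).
    Each image attends to all of its own tokens plus only the subject
    tokens of the other images in the batch.
    """
    outputs = []
    for i, image_queries in enumerate(queries):
        # Build the extended key/value set for image i.
        ext_keys, ext_values = [], []
        for j in range(len(keys)):
            for k, v, is_subject in zip(keys[j], values[j], subject_masks[j]):
                if j == i or is_subject:
                    ext_keys.append(k)
                    ext_values.append(v)
        scale = 1.0 / math.sqrt(len(image_queries[0]))
        dim = len(ext_values[0])
        image_out = []
        for q in image_queries:
            # Scaled dot-product attention over the extended set.
            weights = softmax([dot(q, k) * scale for k in ext_keys])
            image_out.append(
                [sum(w * v[d] for w, v in zip(weights, ext_values)) for d in range(dim)]
            )
        outputs.append(image_out)
    return outputs


# Usage: zero queries/keys give uniform attention, so outputs are plain averages.
zeros = [[0.0, 0.0], [0.0, 0.0]]
q = k = [zeros, zeros]
v = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
# Only image 1's tokens are marked as subject tokens.
out = shared_attention(q, k, v, [[False, False], [True, True]])
print(out[0][0])  # image 0 blends in image 1's subject: [2.0, 2.0]
print(out[1][0])  # image 0 has no subject tokens to share: [3.0, 3.0]
```

A production diffusion model would apply this inside many attention layers and heads over tensors; the plain-Python loops here only make the masking rule easy to follow.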

NVIDIA is also building on its previous work in AI-powered texture painting with a new paper that applies 2D generative diffusion models to 3D meshes, letting artists paint complex textures in real time.

Breakthroughs in Physics-Based Simulation

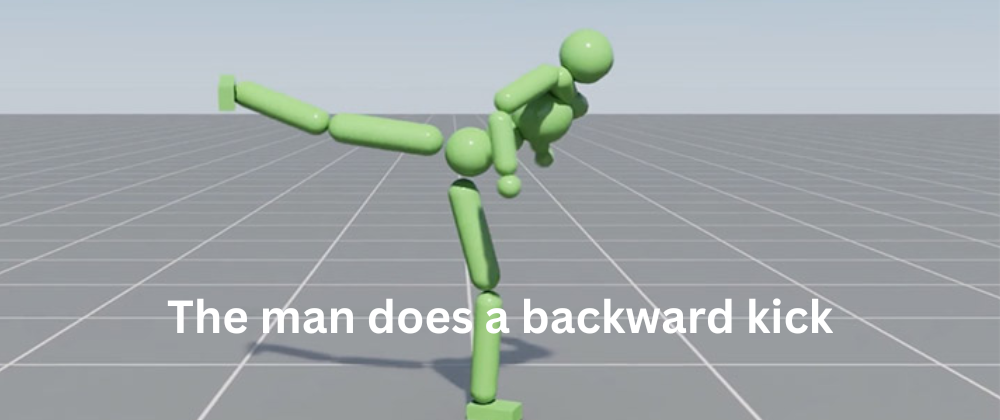

NVIDIA's research is also narrowing the gap between physical objects and their virtual representations through advances in physics-based simulation. One project, SuperPADL, combines reinforcement learning and supervised learning to reproduce more than 5,000 human motion skills in real time on consumer GPUs.

Another paper introduces a neural physics method that uses AI to learn how objects behave when moved within a virtual environment, whether they are represented as 3D meshes, NeRFs, or solid objects generated by a text-to-3D model.

Realistic Rendering and Diffraction Simulation Improvements

NVIDIA's research is also raising the bar for realistic rendering and diffraction simulation. A collaboration with the University of Waterloo presents a method that integrates with path-tracing workflows and simulates diffraction effects up to 1,000 times faster. The approach could also benefit applications such as radar simulation for autonomous vehicles.

NVIDIA is also improving the sampling quality of its ReSTIR algorithm, which has been crucial to bringing path tracing to real-time rendering in games and other applications.
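ReSTIR (reservoir-based spatiotemporal importance resampling) is built on weighted reservoir sampling: each pixel streams through candidate samples, such as light samples, keeps one with probability proportional to its resampling weight, and can then merge its reservoir with those of neighboring pixels or the previous frame. The sketch below shows those original building blocks in plain Python. It does not represent NVIDIA's new sampling improvements; the names `Reservoir`, `ris`, and `combine` are hypothetical, and `combine` assumes all reservoirs share the same target function, so it omits the bias-correction weights a real renderer needs when neighbors differ.

```python
import random


class Reservoir:
    """Single-sample weighted reservoir, the structure ReSTIR resamples with."""

    def __init__(self):
        self.sample = None      # currently selected candidate (y)
        self.weight_sum = 0.0   # running sum of resampling weights (w_sum)
        self.count = 0          # number of candidates seen so far (M)
        self.W = 0.0            # unbiased contribution weight, set by finalize()

    def update(self, candidate, weight, rng):
        # Streaming weighted reservoir sampling: keep `candidate` with
        # probability weight / (total weight seen so far).
        self.weight_sum += weight
        self.count += 1
        if weight > 0 and rng.random() < weight / self.weight_sum:
            self.sample = candidate

    def finalize(self, target_pdf):
        # RIS contribution weight: W = w_sum / (M * p_hat(y)).
        p = target_pdf(self.sample) if self.sample is not None else 0.0
        self.W = self.weight_sum / (self.count * p) if p > 0 else 0.0


def ris(candidates, source_pdf, target_pdf, rng):
    """Resampled importance sampling: candidates drawn from source_pdf are
    resampled toward the (unnormalized) target_pdf."""
    r = Reservoir()
    for x in candidates:
        r.update(x, target_pdf(x) / source_pdf(x), rng)
    r.finalize(target_pdf)
    return r


def combine(reservoirs, target_pdf, rng):
    """Merge reservoirs (e.g. from neighbors or the previous frame).
    Assumes every reservoir used the same target_pdf (no bias correction)."""
    out = Reservoir()
    for r in reservoirs:
        if r.sample is not None:
            out.update(r.sample, target_pdf(r.sample) * r.W * r.count, rng)
    out.count = sum(r.count for r in reservoirs)
    out.finalize(target_pdf)
    return out


# Usage: pick one of two lights, favoring the brighter one.
lights = {"dim": 1.0, "bright": 3.0}


def target(name):
    return lights[name]  # p_hat: here simply the light's intensity


def source(name):
    return 1.0 / len(lights)  # candidates drawn uniformly


rng = random.Random(0)
r = ris(list(lights), source, target, rng)
print(r.sample, target(r.sample) * r.W)  # ~4.0, the total intensity, whichever light is kept
```

Because each reservoir stores only one sample plus a few scalars, merging many neighbors stays cheap, which is what makes this kind of reuse practical at real-time frame rates.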

Multipurpose AI Tools for 3D Representation and Design

NVIDIA is also showcasing AI tools for 3D representation and design, including a GPU-optimized framework called fVDB for large-scale 3D deep learning. Another paper, a Best Technical Paper award winner, introduces a unified theory for representing how 3D objects interact with light.

Overall, the research NVIDIA is presenting at SIGGRAPH 2024 continues the company's push across simulation, generative AI, and realistic rendering, with applications ranging from storytelling and 3D content creation to games and autonomous-vehicle simulation.
